MLOps – Fast-tracking AI Ethics?

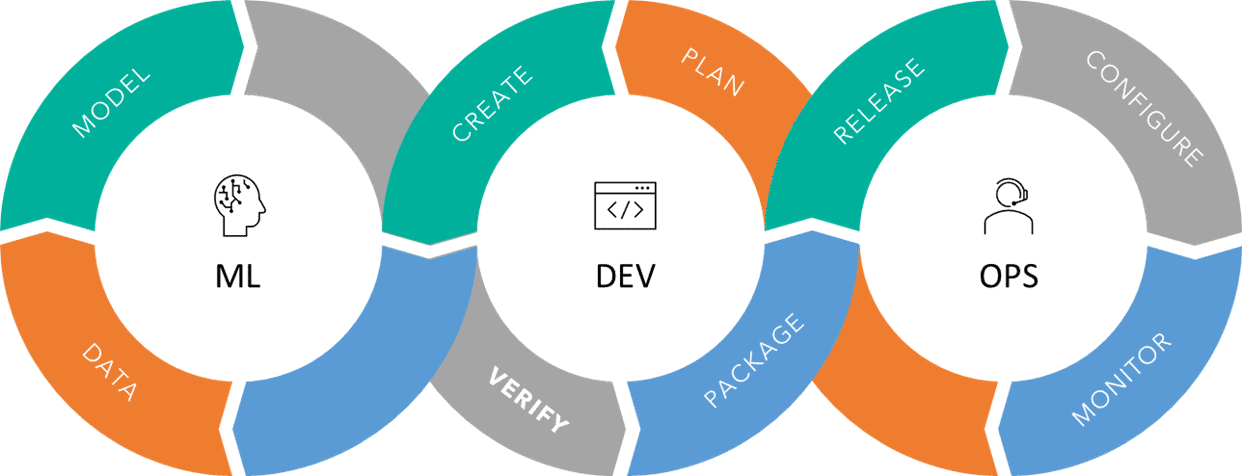

MLOps, the big bet for scaling Machine Learning, promises a seamless path from development through to in-life use of models via automated, DevOps-style CI/CD workflows. But what does this mean for an AI Ethics discipline that has focused its firepower on single-shot development projects?

In this series of posts I’m looking at some of the effort involved in implementing AI Ethics, covering key considerations for the board and C-Suite, and some perhaps overlooked realities of applying AI Ethics principles to real-world adoption.

MLOps – DevOps for Machine Learning

The benefits of DevOps, a working approach that fuses IT development and operations into a seamless, highly automated feedback loop of Continuous Integration, Delivery and Deployment, are coming to Machine Learning. It’s fair to note that, as a nascent capability, Machine Learning can struggle with the handoffs between roles: from data analyst to data scientist, and then on to systems implementation for packaging and release into real-world use. MLOps promises the same hands-off, automation-heavy integration as DevOps, allowing mature, traceable and near real-time release of models in their packaged applications. That’s a far cry from the linear, single-shot development waterfall assumed by many AI Ethics frameworks and approaches.

MLOps = ML + Dev + Ops

Baking in ethical requirements

So how do we ensure that the highly relevant considerations of ethics and responsible use remain represented throughout the MLOps cycle? Here are some tips:

Harness development tracking. The good news is that MLOps, done right, brings traceability. Using an end-to-end workflow tool to manage the development, packaging and deployment of models in applications leaves a clear paper trail of data sources, models used, training approach employed, and so on. When something DOES go wrong, we can quickly trace the source of the problem and adjust course. Mix and match tools across the pipeline, and this transparency can be lost.
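To make the idea of a paper trail concrete, here is a minimal sketch of the kind of record a pipeline could write at each training run. Workflow tools typically capture this for you; the `LineageRecord` class and `record_training_run` function are hypothetical names used only for illustration, and hashing the raw training data with SHA-256 is one assumed way of pinning down exactly which data a model saw.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


def fingerprint(data: bytes) -> str:
    """Content hash, so the exact training data can be identified later."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class LineageRecord:
    """Hypothetical paper-trail entry written by the pipeline at each training run."""
    model_name: str
    model_version: str
    data_source: str
    data_sha256: str
    training_approach: str
    recorded_at: str


def record_training_run(model_name, model_version, data_source, data, training_approach):
    """Build a lineage record and return it as JSON for storage alongside the model."""
    record = LineageRecord(
        model_name=model_name,
        model_version=model_version,
        data_source=data_source,
        data_sha256=fingerprint(data),
        training_approach=training_approach,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )
    return json.dumps(asdict(record), sort_keys=True)
```

When a problem surfaces in production, a record like this lets you go straight from the deployed model version to the exact data and training approach behind it.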

Use ethics metadata. Rich metadata on models in repositories is an investment that repays handsomely. Ensure it includes information that lets application developers make informed decisions about use: qualitative data, social reaction, social-acceptability testing, the human sensitivity of predictions, and so on, as well as the usual suspects of technical specification.
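As an illustration, ethics metadata can sit alongside the technical spec as a structured record that the pipeline checks before a model is published. This is a sketch under assumptions: the `EthicsMetadata` fields, the three-level `Sensitivity` scale and the completeness rules are hypothetical, not a standard schema.

```python
import json
from dataclasses import dataclass, field, asdict
from enum import Enum


class Sensitivity(str, Enum):
    """Hypothetical scale of how much harm a wrong or misused prediction could do to a person."""
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass
class EthicsMetadata:
    """Hypothetical ethics fields stored next to the usual technical spec in a model repository."""
    model_name: str
    version: str
    prediction_sensitivity: Sensitivity
    intended_use: str
    social_acceptability_tested: bool = False
    qualitative_notes: list[str] = field(default_factory=list)
    known_social_reaction: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """List ethics fields an application developer would need that are still empty."""
        missing = []
        if not self.intended_use.strip():
            missing.append("intended_use")
        if not self.social_acceptability_tested:
            missing.append("social_acceptability_tested")
        # Assumed rule: high-sensitivity models must carry qualitative context.
        if self.prediction_sensitivity is Sensitivity.HIGH and not self.qualitative_notes:
            missing.append("qualitative_notes")
        return missing

    def to_json(self) -> str:
        """Serialise for storage alongside the model artefact."""
        return json.dumps(asdict(self), indent=2)
```

For example, a high-sensitivity model registered with only an intended-use statement would be flagged as incomplete:

```python
card = EthicsMetadata("credit-risk", "1.4.0", Sensitivity.HIGH,
                      intended_use="Pre-screening support; a human makes the final decision")
print(card.missing_fields())  # ['social_acceptability_tested', 'qualitative_notes']
```

A registry step could then decline to publish the model until `missing_fields()` comes back empty.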

Streamline testing of NFRs. Test automation requires requirements to be codified. Although some ethical considerations can be codified and tested automatically (for example, measures of bias in model predictions), many are external Non-Functional Requirements (external NFRs). NFRs are often tested manually, and some can be highly subjective. To get this right, make sure your CI environment is ready to test ethical NFRs. The sensitivity of the application, data or prediction should set the threshold at which an NFR review is triggered.
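For the parts of ethics that can be codified, a CI step can compute a bias measure and compare it against a threshold set by the sensitivity of the prediction. The sketch below uses demographic parity difference (the gap in positive-prediction rates between groups) purely as one example metric; the `REVIEW_THRESHOLDS` values and the function names are assumptions, and a real project would choose its metrics and thresholds with the product owner and regulatory lead.

```python
from collections import defaultdict

# Hypothetical thresholds: the more sensitive the prediction, the smaller
# the disparity tolerated before a manual NFR review is triggered.
REVIEW_THRESHOLDS = {"low": 0.20, "medium": 0.10, "high": 0.05}


def positive_rates(predictions, groups):
    """Share of positive (1) predictions for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def ci_bias_gate(predictions, groups, sensitivity):
    """Return (gap, needs_review); needs_review routes the build to a manual NFR review."""
    gap = demographic_parity_gap(predictions, groups)
    return gap, gap > REVIEW_THRESHOLDS[sensitivity]
```

A pipeline could fail or pause the build when `needs_review` is `True`, handing the case to a person rather than deciding automatically; the automated check only decides when human judgement is needed.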

Monitor live-data models closely. Dynamic data and evolving models add an extra dimension: the intended mitigations for ethical risk must be probed continually during operations monitoring, to ensure the model still exhibits the expected behaviour that got it to production. Because users may have spent time on customisation, a roll-back can significantly affect their experience. There’s a trade-off here between anticipating unintended use and inputs, and accepting the risk of misbehaviour; where that balance sits is an informed decision to be made when specifying requirements.
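One common way to probe live behaviour is to compare the distribution of live inputs or scores with the one seen at validation, for example with the Population Stability Index (PSI). The sketch below is a minimal, dependency-free version; the bin edges and the 0.1 and 0.25 cut-offs are widely quoted rules of thumb, assumed here rather than recommended, and `drift_status` is a hypothetical helper.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges):
    """Fraction of values in each bin defined by sorted interior edges (len(edges) + 1 bins)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    # Small floor avoids log(0) and division by zero for empty bins.
    return [max(c / total, 1e-6) for c in counts]


def population_stability_index(expected, actual, edges):
    """PSI between a reference (validation-time) sample and a live sample."""
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


def drift_status(psi, warn=0.1, act=0.25):
    """Map PSI to an action using assumed rule-of-thumb cut-offs; tune per use case."""
    if psi >= act:
        return "escalate"  # re-check ethical mitigations; consider roll-back
    if psi >= warn:
        return "investigate"
    return "stable"
```

Mapping drift to "investigate" or "escalate" rather than triggering an automatic roll-back reflects the trade-off above: roll-back has a cost for users, so the decision stays with the people who own the requirements.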

Strengthen and empower the requirements/testing combo. The interworking between the product owner, regulatory assurance and experience assurance (XA) roles is the lynchpin for ensuring ethical considerations are represented. In their requirements, the product owner represents not only the intended functionality for the customer, but also the voice of the societal licence to operate, the organisation’s policies on acceptable use, and the values the organisation espouses. The XA lead ensures these are fully represented in the final experience of the product. The regulatory lead ensures that requirements relating to human rights and other applicable regulations are represented. The people in these roles must be fully trained to understand the ethical concerns raised by the use of ML technology.

Ethical MLOps is only as good as its organisation

It’s important to note that a well-formed MLOps structure will fail if the organisation it operates in has not addressed the upstream and downstream drivers of ethical and responsible outcomes. Most importantly, product owners will fail if they have no direction from leadership, no agreed norms of responsible use to reference, and no input sourced from the societal context of the deployed product, or if the company’s communicated values conflict with the behaviours that bring success in the organisation.

Ultimately, as well as expanding the goals of the product, and so the roles of the team, MLOps demands that the business be well positioned and conversant with the concerns of responsible use, so that the decision makers in an MLOps project are empowered to make informed decisions.

TechInnocens provides C-Suite and board level advice on the implementation of AI Ethics frameworks using pragmatic, achievable interventions grounded in the reality of technology adoption.